Orbit

Cross-cultural collaboration tool for researchers

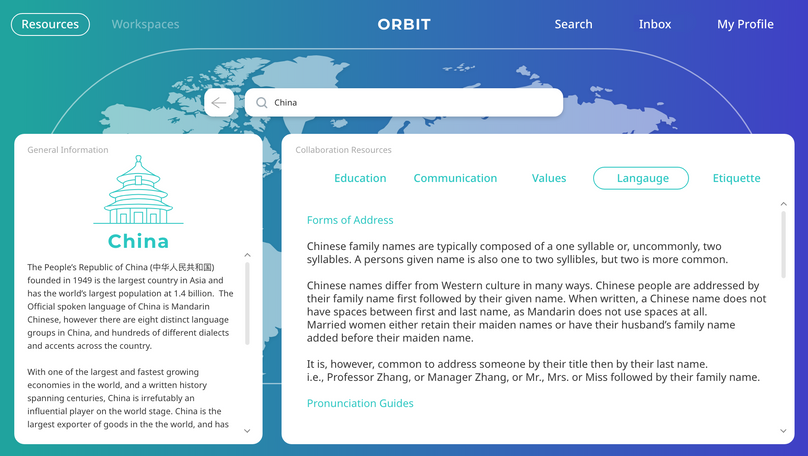

Welcome page for the Orbit web tool.

Project Description

Orbit is a cross-cultural collaboration tool that helps researchers find others interested in the same research topic, regardless of whom they know or where they are located. My team focused on addressing both the biases researchers bring and the biases the web tool itself may be designed to have.

Motivation

Within this group project, I wanted to focus on bias because it is a topic I find myself confronting as a person of color, a woman, and an artist. I am aware that bias exists within academia, including among educators and researchers.

Researchers have the wonderful opportunity to collaborate with others in their field, but I am concerned that personal biases can limit not only what they research but also whom they choose as collaborators.

Skills used

- Design research
- Interviews/social engagement
- Literary research

Research

Interviews

First, we asked the lead collaborators on the web tool what they envisioned it doing. They wanted a collaboration tool that would make it easier to find others interested in working on a specific topic, without having to go through existing networks or be in the same country.

From that, we realized that the community this tool is intended for might need to check their own biases before using it in order to get the most out of it.

Literary Research

For literary research, we found articles that tie biases to both higher education and interfaces. Bias training is commonly used to fight these issues. It is also known as unconscious bias training, implicit bias training, and sensitivity training.

Bias training "has to be on-going and long term. It is impossible to change other's behaviors, stereotypes, and perceptions via one-day training."

How do we measure whether the training is effective or not?

Original Solutions

Our original idea was to restrict identity information on profiles to eliminate causes of bias, either by entirely removing elements such as name, gender, and age or by having users create a cartoon character of themselves.

In the next iteration, anyone creating an account would go through a brief bias awareness segment. Users who then completed a longer, more intensive bias training segment would gain a visual certification on their account (like a check mark) as an incentive to do it.

Problems

- After researching, we found that telling people they should take an implicit bias test or training tends to provoke a volatile response, whether good or bad.
- Standard user profiles made the web tool seem more credible.
- Withholding people's information would only delay the biased reactions.
- Requiring people to take a training test would deter them from using the website.
- Making the training optional, but making it feel mandatory through incentives, added an elitist attitude and again made people want to use the site less.